I thought I’d write about how I tried to use my smarts to be lazy (actually, that might be a harsh word; it was more about flexibility, reusability and maintainability), and how important it is for me, and for you, to realize when a design gets cumbersome and should be abandoned.

Now, many of the pieces I will talk about have some value and can be applied in other scenarios for sure. So, I advise you to focus on the concepts rather than the specific application of them in my case.

The design problem I had was to expose my data entities through a web service.

Following good design principles, I wanted to expose data contracts rather than the underlying business entities or data schemas, even though all I really wanted to expose was my (table) data. For example, I wanted to return a Person object that was essentially an entity object mapped to a Person table, which is a standard CRUD scenario.

Now, I did not want to manually hand-code and duplicate the entity object properties and decorate them with Service attributes, so I thought I could do it dynamically by creating a service object (shell) which would inherit from the required entity object and control which properties were exposed.

A service object is serializable, and that meant I would need to dynamically figure out the parent properties and write them out. Clearly, I could not just rely on the standard XML serialization attributes (such as XmlElement) that drive the XmlSerializer, so I implemented the IXmlSerializable interface and added a Serializable attribute to the class.

    [Serializable]
    public class Person : PersonDb, IXmlSerializable

The IXmlSerializable member that mattered to me was WriteXml, since I only had to return data.

Writing out XML for serialization is very simple. All you have to do is add the names and values:

    public void WriteXml(System.Xml.XmlWriter writer)
    {
        writer.WriteElementString("foo", "foovalue");
    }

There were two mechanisms involved: first, figuring out which properties need to be exposed, and second, getting that data from the parent.

I figured this could be accomplished through a custom attribute that specifies the (target) element name and the (parent) property that returns the data.

    [AttributeUsage(AttributeTargets.Class, AllowMultiple = true)]
    public class SimpleFacadeAttribute : System.Attribute
    {
        public string PropertyName { get; set; }
        public string XmlElementName { get; set; }

        /// <summary>
        /// Specify the base class property; the serialized XML element will have the same name.
        /// </summary>
        /// <param name="propertyName">Name of the base class property to read.</param>
        public SimpleFacadeAttribute(string propertyName)
        {
            this.PropertyName = propertyName;
            this.XmlElementName = propertyName; // use the base property name
        }

        /// <summary>
        /// Specify the base class property and the target XML element name.
        /// </summary>
        /// <param name="propertyName">Name of the base class property to read.</param>
        /// <param name="xmlElementName">Name of the XML element to emit.</param>
        public SimpleFacadeAttribute(string propertyName, string xmlElementName)
        {
            this.PropertyName = propertyName;
            this.XmlElementName = xmlElementName;
        }
    }

This is a valuable pattern in itself (although here I have the solution, not the problem :)): the output is specified by adding attributes to the class. If only the source property name (the parent property) is specified, the element is emitted under the same name; alternatively, you can specify an alias.

    [SimpleFacadeAttribute("Address1", "Line1")]
    [SimpleFacadeAttribute("City")]
    [SimpleFacadeAttribute("State")]
    [SimpleFacadeAttribute("ZipCode", "Zip")]
    [Serializable]
    public class Person : PersonDb, IXmlSerializable

Ok, now I had to implement the writing, which I did by looping through the attributes and calling the parent property to get the value via Reflection. In fact, I wrote a generic method, so it could be called by other classes too.

    public static void SerializeSimple<T>(T source, System.Xml.XmlWriter writer)
    {
        Type myType = source.GetType();

        // Go through the SimpleFacade attributes and serialize them.
        object[] attributes = myType.GetCustomAttributes(false);
        foreach (object attrib in attributes)
        {
            // Compare the short type name; attrib.ToString() would include any namespace.
            if (attrib.GetType().Name == SIMPLE_FACADE_ATTRIB)
            {
                // Get the current attribute.
                SimpleFacadeAttribute sfAttrib = (SimpleFacadeAttribute)attrib;

                // Get the value of the specified property from the source object.
                System.Reflection.MethodInfo getMethod = myType.GetProperty(sfAttrib.PropertyName).GetGetMethod();
                object val = getMethod.Invoke(source, null);
                string value = val == null ? "" : val.ToString();

                // Write the element under its target name so that aliases are honored.
                writer.WriteStartElement(sfAttrib.XmlElementName);
                writer.WriteString(value);
                writer.WriteEndElement();
            }
        }
    }

Note that the constant SIMPLE_FACADE_ATTRIB is "SimpleFacadeAttribute", the class name of the custom attribute.
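To see the pieces working together, here is a minimal end-to-end sketch. It is an illustration under assumptions, not my production code: the PersonDb properties, the FacadeSerializer class name and the sample values are made up for the example, and the attribute lookup filters by type through GetCustomAttributes(typeof(SimpleFacadeAttribute), false) instead of comparing class names. The order in which reflection returns the attributes is not guaranteed, so the element order in the output may vary.

```csharp
using System;
using System.IO;
using System.Xml;
using System.Xml.Schema;
using System.Xml.Serialization;

[AttributeUsage(AttributeTargets.Class, AllowMultiple = true)]
public class SimpleFacadeAttribute : Attribute
{
    public string PropertyName { get; set; }
    public string XmlElementName { get; set; }

    public SimpleFacadeAttribute(string propertyName) : this(propertyName, propertyName) { }

    public SimpleFacadeAttribute(string propertyName, string xmlElementName)
    {
        PropertyName = propertyName;
        XmlElementName = xmlElementName;
    }
}

// Hypothetical stand-in for the entity that maps to the Person table.
public class PersonDb
{
    public string Address1 { get; set; }
    public string City { get; set; }
    public string InternalNotes { get; set; } // has no attribute, so it is never exposed
}

[SimpleFacade("Address1", "Line1")]
[SimpleFacade("City")]
public class Person : PersonDb, IXmlSerializable
{
    public XmlSchema GetSchema() { return null; }

    // Only writing is needed in this scenario.
    public void ReadXml(XmlReader reader) { throw new NotSupportedException(); }

    public void WriteXml(XmlWriter writer) { FacadeSerializer.SerializeSimple(this, writer); }
}

public static class FacadeSerializer
{
    public static void SerializeSimple<T>(T source, XmlWriter writer)
    {
        Type type = source.GetType();
        foreach (SimpleFacadeAttribute attr in
                 type.GetCustomAttributes(typeof(SimpleFacadeAttribute), false))
        {
            // Read the parent property named by the attribute and emit it under the alias.
            object val = type.GetProperty(attr.PropertyName).GetValue(source, null);
            writer.WriteElementString(attr.XmlElementName, val == null ? "" : val.ToString());
        }
    }
}

public static class Program
{
    public static string Serialize(Person person)
    {
        var serializer = new XmlSerializer(typeof(Person));
        var settings = new XmlWriterSettings { OmitXmlDeclaration = true };
        using (var text = new StringWriter())
        using (var xml = XmlWriter.Create(text, settings))
        {
            serializer.Serialize(xml, person);
            xml.Flush();
            return text.ToString();
        }
    }

    public static void Main()
    {
        var person = new Person { Address1 = "1 Main St", City = "Springfield", InternalNotes = "secret" };
        Console.WriteLine(Serialize(person));
    }
}
```

The XmlSerializer writes the root element and hands the body over to WriteXml, so only the attributed properties (Line1 and City here) end up in the XML.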

Ok, so we are dynamically serializing an object, but then comes the next hurdle: how is this going to be defined in a contract? Most likely as WSDL. So, I needed to generate WSDL for this class. Note that the definition is not dynamic in the sense of “changing” or morphing whenever its parent changes, because the custom attributes control the definition; it is just produced at run time.

There were 2 requirements for the WSDL, generation and publication.

The generation is what made me realize that my initial goal of making things simpler and writing less code was not achievable, because I did not find a way to generate the WSDL elements that was as easy as the serialization. For starters, each element had to be declared with its name and type as strings:

    <s:element minOccurs="1" maxOccurs="1" name="name" type="s:string" />

On top of that, all the elements had to be tied together as a sequence, a document definition, etc. I had no desire, nor will I ever, to write all the code to generate it.
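To give a feel for the effort, here is a rough sketch of only the smallest piece: building the element sequence for one type from the custom attributes with LINQ to XML. The SchemaSketch class, the PersonDb properties and the decision to declare every element as s:string (the serializer writes values via ToString) are assumptions for illustration. The messages, port types, bindings and service sections that a complete WSDL needs are not covered at all.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

[AttributeUsage(AttributeTargets.Class, AllowMultiple = true)]
public class SimpleFacadeAttribute : Attribute
{
    public string PropertyName { get; set; }
    public string XmlElementName { get; set; }

    public SimpleFacadeAttribute(string propertyName) : this(propertyName, propertyName) { }

    public SimpleFacadeAttribute(string propertyName, string xmlElementName)
    {
        PropertyName = propertyName;
        XmlElementName = xmlElementName;
    }
}

// Hypothetical entity and facade, reduced to what the sketch needs.
public class PersonDb
{
    public string Address1 { get; set; }
    public string City { get; set; }
}

[SimpleFacade("Address1", "Line1")]
[SimpleFacade("City")]
public class Person : PersonDb { }

public static class SchemaSketch
{
    private static readonly XNamespace S = "http://www.w3.org/2001/XMLSchema";

    // Builds an <s:complexType> with one <s:element> per SimpleFacade attribute.
    public static XElement BuildComplexType(Type type)
    {
        var elements = type
            .GetCustomAttributes(typeof(SimpleFacadeAttribute), false)
            .Cast<SimpleFacadeAttribute>()
            .Select(a => new XElement(S + "element",
                new XAttribute("minOccurs", "1"),
                new XAttribute("maxOccurs", "1"),
                new XAttribute("name", a.XmlElementName),
                new XAttribute("type", "s:string"))); // assumption: everything is a string

        return new XElement(S + "complexType",
            new XAttribute(XNamespace.Xmlns + "s", S.NamespaceName),
            new XAttribute("name", type.Name),
            new XElement(S + "sequence", elements));
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(SchemaSketch.BuildComplexType(typeof(Person)));
    }
}
```

Even this fragment needs namespace handling and a type-mapping decision, which is a fair hint at how much code the full document would take.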

It was time to abandon the pattern…

Conceptually, I thought I could emit the required class and use wsdl.exe to produce its WSDL. FYI, this related article by Craig from Pluralsight is pretty good:

http://www.pluralsight.com/community/blogs/craig/archive/2004/10/18/2877.aspx

He uses the ServiceDescriptionReflector class to generate the WSDL.

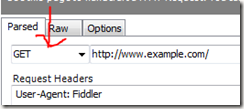

Now, out of curiosity, I had to figure out how publication of the WSDL might work. The convention for obtaining the WSDL of a web service is to append ?wsdl to its URI, e.g. http://personservice.com?wsdl

In my case, using an ASP.NET (asmx) service, the engine produces the WSDL (I need to research this). To publish my own, I would essentially have to implement an HttpHandler that intercepts the request and returns the required WSDL.

Taking a step back, I realize that writing an add-in or tool to generate the code for the service class from different classes might be valuable. So, my Person class might map to PersonDb and Address, and I could have a tool select the classes and properties and spit out code. To make this process repeatable, I could use my custom attributes to specify the mapping.

This approach also makes everything strongly typed, since nothing (like the property names used in Reflection) is resolved dynamically at run time.
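As a rough idea of what such a tool could do, here is a small sketch that reads the mapping attributes from a class at generation time and emits C# source for a strongly typed contract class. The FacadeGenerator class, the PersonMapping and PersonContract names and the PersonDb properties are all hypothetical, and the sketch assumes that the mapping class derives directly from the entity and that the property types are simple, non-nested and non-generic.

```csharp
using System;
using System.Reflection;
using System.Text;

[AttributeUsage(AttributeTargets.Class, AllowMultiple = true)]
public class SimpleFacadeAttribute : Attribute
{
    public string PropertyName { get; set; }
    public string XmlElementName { get; set; }

    public SimpleFacadeAttribute(string propertyName) : this(propertyName, propertyName) { }

    public SimpleFacadeAttribute(string propertyName, string xmlElementName)
    {
        PropertyName = propertyName;
        XmlElementName = xmlElementName;
    }
}

// Hypothetical entity and mapping class; the mapping only carries attributes.
public class PersonDb
{
    public string Address1 { get; set; }
    public int ZipCode { get; set; }
}

[SimpleFacade("Address1", "Line1")]
[SimpleFacade("ZipCode", "Zip")]
public class PersonMapping : PersonDb { }

public static class FacadeGenerator
{
    // Emits source for a plain class with one property per mapping attribute,
    // plus a constructor that copies the values from the entity.
    public static string Generate(Type mapping, string className)
    {
        Type entity = mapping.BaseType;
        var properties = new StringBuilder();
        var copies = new StringBuilder();

        foreach (SimpleFacadeAttribute attr in
                 mapping.GetCustomAttributes(typeof(SimpleFacadeAttribute), false))
        {
            PropertyInfo source = entity.GetProperty(attr.PropertyName);
            if (source == null)
                throw new InvalidOperationException(
                    "No property " + attr.PropertyName + " on " + entity.Name);

            properties.AppendLine("    public " + source.PropertyType.FullName + " "
                + attr.XmlElementName + " { get; set; }");
            copies.AppendLine("        " + attr.XmlElementName + " = source."
                + attr.PropertyName + ";");
        }

        var code = new StringBuilder();
        code.AppendLine("public class " + className);
        code.AppendLine("{");
        code.Append(properties);
        code.AppendLine("    public " + className + "() { }");
        code.AppendLine("    public " + className + "(" + entity.FullName + " source)");
        code.AppendLine("    {");
        code.Append(copies);
        code.AppendLine("    }");
        code.AppendLine("}");
        return code.ToString();
    }
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(FacadeGenerator.Generate(typeof(PersonMapping), "PersonContract"));
    }
}
```

A misspelled property name fails here at generation time, and the generated class is ordinary code that the standard serializer and WSDL tooling can handle, which is the point of moving the reflection out of the run-time path.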

This would be a cool tool to have in Visual Studio too. Ah, time to go back to the drawing board…